DeepSeek vs Groq Deepseek API: Price and Benchmark Comparison

Jan 30, 2025

A detailed comparison of the DeepSeek API and the Groq Deepseek API, covering pricing, benchmarks, and key features, to help you decide which platform best fits your AI needs.

The AI model landscape is evolving rapidly. New players keep emerging while established ones push the limits of performance and accessibility, so developers and businesses need to understand how the available platforms differ. This article compares two ways of accessing DeepSeek's models: DeepSeek's own API and the Groq Deepseek API. It draws on several sources to outline the strengths and limitations of each, covering pricing structures, performance benchmarks, and the key features that set the two approaches apart.

Understanding DeepSeek and Groq's Role

DeepSeek is a Chinese AI lab known for cutting-edge models. These include the DeepSeek R1 series, which excels at complex reasoning tasks, and the highly efficient DeepSeek V3. The models are released with open weights, which allows greater flexibility and customization. Groq, by contrast, is a company focused on fast AI inference and provides a platform for running AI models efficiently. Its Groq Deepseek API offers access to DeepSeek models.

DeepSeek R1 and Groq Cloud Integration

The Groq Deepseek API makes DeepSeek R1 Distill LLaMA 70B available through GroqCloud™. This reasoning model was created by fine-tuning a Llama 70B model on outputs from DeepSeek-R1. It supports Chain of Thought (CoT) processing for tasks that require logical inference and detailed analysis. By breaking complex queries into logical steps, it produces more precise and contextually relevant outputs. Groq's platform emphasizes speed and efficiency, which makes it a compelling option for users who need fast responses.

Credit: www.geeky-gadgets.com

Key Features of DeepSeek R1 Distill LLaMA 70B on GroqCloud™

- Advanced Reasoning: The model is designed for complex queries, excelling in logical inference, detailed analysis, and structured problem-solving.

- Chain of Thought (CoT) Processing: It breaks down complex questions into sequential steps for increased clarity and accuracy.

- Customizable Queries: Users can tailor inputs to meet specific needs across a variety of fields, from scientific research to financial modeling.

- Accessibility: The model is available through Groq's console and API and is subject to token-based rate limits.
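The distilled R1 model writes out its chain of thought before giving the final answer, so applications usually need to separate the two. The sketch below assumes the reasoning arrives inline between `<think>` and `</think>` tags, which is how the open DeepSeek-R1 distilled models format their output by default. The function name `split_reasoning` is hypothetical. Check Groq's documentation for any built-in option that changes how reasoning is returned.

```python
import re
from typing import Tuple

# Non-greedy match of the first <think>...</think> block; DOTALL lets "."
# match newlines so multi-line reasoning is captured.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Split a model completion into (reasoning, answer).

    Assumption: reasoning, if present, is wrapped in <think>...</think>
    tags. Without tags, the whole text is returned as the answer.
    """
    match = THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # The answer is whatever surrounds the think block.
    answer = (text[: match.start()] + text[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    completion = "<think>2 apples plus 3 apples is 5 apples.</think>\nThe answer is 5."
    reasoning, answer = split_reasoning(completion)
    print(reasoning)  # 2 apples plus 3 apples is 5 apples.
    print(answer)     # The answer is 5.
```

If a response is cut off before the closing tag, this sketch returns everything as the answer. A production parser may want to flag that case instead.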

Pricing Structures: DeepSeek API vs. Groq Deepseek API

Pricing is one of the biggest differences between the two. DeepSeek publishes per-token prices and uses caching to reduce costs for repeated queries. Groq takes a different approach that centers on access to its inference platform.

DeepSeek API Pricing

DeepSeek's API publishes a transparent, per-token price list:

- DeepSeek-R1 input tokens (cache miss): $0.55 per million tokens

- DeepSeek-R1 input tokens (cache hit): $0.14 per million tokens

- DeepSeek-R1 output tokens: $2.19 per million tokens

- DeepSeek V3: $0.14 per million input tokens and $0.28 per million output tokens

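DeepSeek's caching is automatic and carries no extra fee. When the beginning of a request repeats input from an earlier request, such as a shared system prompt or earlier turns of a conversation, those input tokens are billed at the cache-hit rate. Caching can cut costs by up to 90% for repeated queries, depending on the model. At the DeepSeek-R1 rates above, a cache hit makes each input token about 75% cheaper ($0.14 instead of $0.55 per million).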
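The following sketch estimates a DeepSeek-R1 bill from token counts and an assumed cache-hit ratio, showing how the listed rates and caching combine. The `Rates` class and the `estimate_cost` function are hypothetical helpers. The prices are copied from the list above, so check DeepSeek's pricing page before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Prices in USD per million tokens."""
    input_cache_miss: float
    input_cache_hit: float
    output: float


# DeepSeek-R1 rates as listed in this article; verify against DeepSeek's pricing page.
DEEPSEEK_R1 = Rates(input_cache_miss=0.55, input_cache_hit=0.14, output=2.19)


def estimate_cost(rates: Rates, input_tokens: int, output_tokens: int,
                  cache_hit_ratio: float = 0.0) -> float:
    """Estimate a bill in USD.

    cache_hit_ratio is the assumed fraction of input tokens billed at the
    cache-hit rate; output tokens always pay the full output rate.
    """
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    hit_tokens = input_tokens * cache_hit_ratio
    miss_tokens = input_tokens - hit_tokens
    total = (miss_tokens * rates.input_cache_miss
             + hit_tokens * rates.input_cache_hit
             + output_tokens * rates.output)
    return total / 1_000_000


if __name__ == "__main__":
    for ratio in (0.0, 0.8):
        cost = estimate_cost(DEEPSEEK_R1, 10_000_000, 2_000_000, cache_hit_ratio=ratio)
        print(f"cache hit ratio {ratio:.0%}: ${cost:.2f}")
```

Consider a workload of 10 million input tokens and 2 million output tokens. The estimate falls from $9.88 with no cache hits to $6.60 when 80% of input tokens hit the cache. Output tokens are never discounted, which is why the saving on the total bill is smaller than the per-token cache discount.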

Groq Deepseek API Pricing

Groq provides access to DeepSeek models, but its pricing for the Groq Deepseek API is less transparent than DeepSeek's published per-token rates. Groq applies token-based rate limits: standard accounts are capped on requests per day and tokens per minute, and the exact limits vary by account type. At the time of writing, Groq had not disclosed pricing for the DeepSeek R1 Distill LLaMA 70B model.
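Groq enforces limits on requests per day and tokens per minute, so a client can track its own usage to avoid rejected requests. The sketch below is a generic sliding-window limiter and is not part of any Groq SDK. The class name is hypothetical, and the limit values in the usage note are placeholders for whatever limits your account shows.

```python
import time
from collections import deque
from typing import Callable, Deque, Tuple


class SlidingWindowLimiter:
    """Client-side guard for a usage budget over a rolling time window.

    Hypothetical helper: usable for a tokens-per-minute budget
    (window_seconds=60) or a requests-per-day budget (window_seconds=86_400).
    """

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self._events: Deque[Tuple[float, int]] = deque()  # (timestamp, amount)
        self._used = 0

    def _evict_expired(self, now: float) -> None:
        # Drop usage recorded before the start of the current window.
        while self._events and now - self._events[0][0] >= self.window_seconds:
            _, amount = self._events.popleft()
            self._used -= amount

    def try_acquire(self, amount: int) -> bool:
        """Reserve `amount` units if the budget allows; return False otherwise."""
        now = self.clock()
        self._evict_expired(now)
        if self._used + amount > self.limit:
            return False
        self._events.append((now, amount))
        self._used += amount
        return True
```

For example, `SlidingWindowLimiter(limit=6000, window_seconds=60)` could guard a tokens-per-minute budget, with the request's token count estimated before sending. `SlidingWindowLimiter(limit=1000, window_seconds=86_400)` with `try_acquire(1)` per call could guard a requests-per-day budget. Both numbers are illustrative only.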

Check Groq's official site for current pricing, because it can change with usage and account type. Groq's pricing centers on access to its fast inference platform. That can be cost-effective for users who need speed, but it is not directly comparable to the per-token pricing of DeepSeek's API.

Performance Benchmarks and Speed

Performance matters as much as price. DeepSeek models perform strongly in mathematical reasoning, coding challenges, and logical problem-solving.

DeepSeek Model Performance

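The results below are for the full DeepSeek-R1 model served by DeepSeek's own API. The distilled 70B model on GroqCloud is smaller and scores lower on the same benchmarks. DeepSeek-R1 matches or approaches OpenAI's o1 in key areas: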

- Mathematical Reasoning: Achieves high scores on benchmarks such as MATH-500.

- Coding Challenges: Achieves a Codeforces rating close to that of OpenAI's o1.

- Logical Problem-Solving: Uses chain-of-thought reasoning to break down complex problems.

DeepSeek-V3 is notable for its efficiency. It reaches performance comparable to models such as Claude 3.5 Sonnet at a significantly lower training cost.

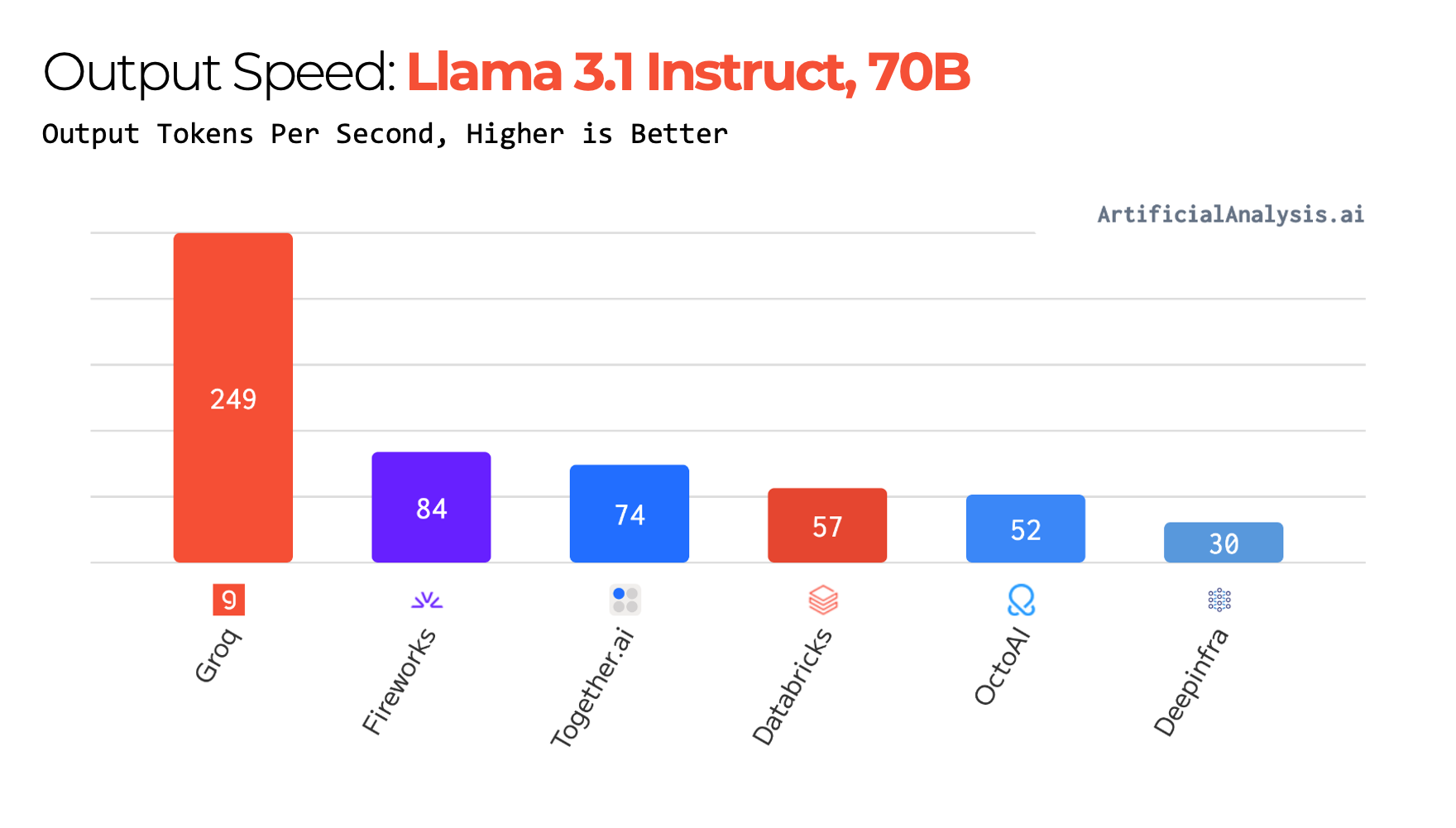

Groq's Focus on Speed

Groq's main advantage is fast inference. Independent benchmarks show Groq delivering very high output speeds for openly available models such as Llama 3.1, Mixtral, and Gemma. This makes it a strong platform for applications where low latency is critical. The Groq Deepseek API brings this speed to the DeepSeek model it serves.

Credit: groq.com
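Published speed figures depend on prompt length, load, and region, so it can be worth measuring latency on your own workload. This sketch turns timestamps recorded while streaming a response into two common metrics: time to first token and output tokens per second. It assumes each timestamp marks one token arriving. Real streaming APIs may pack several tokens into one chunk, so treat the result as approximate. `speed_stats` is a hypothetical helper.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class SpeedStats:
    time_to_first_token: float  # seconds from sending the request to the first token
    tokens_per_second: float    # generation rate after the first token


def speed_stats(request_start: float, token_times: Sequence[float]) -> SpeedStats:
    """Compute latency metrics from token arrival timestamps (in seconds).

    Assumes one timestamp per token, e.g. from time.perf_counter().
    """
    if not token_times:
        raise ValueError("no tokens were received")
    ttft = token_times[0] - request_start
    generation_time = token_times[-1] - token_times[0]
    if generation_time > 0:
        # Tokens produced after the first one, divided by the time they took.
        tps = (len(token_times) - 1) / generation_time
    else:
        # Rate is undefined with a single token or identical timestamps.
        tps = math.nan
    return SpeedStats(ttft, tps)
```

In practice, call `time.perf_counter()` just before sending a streaming request and again as each chunk arrives. Then pass those values in, running the same prompts against both providers.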

Practical Considerations: Accessing and Using the Models

Accessing DeepSeek models can be done through either the official DeepSeek API or via the Groq Deepseek API.

DeepSeek API Usage

The DeepSeek API is designed for ease of use and gives developers extensive customization options. It follows the OpenAI API format, so the models can be integrated directly into existing projects. DeepSeek releases its models with open weights, so they can also be run locally.

Groq Deepseek API Usage

The Groq Deepseek API is available through Groq's console, which streamlines interaction with the platform. The console offers a user-friendly interface for entering queries, managing tasks, and monitoring performance. For programmatic access, Groq's API is compatible with OpenAI's endpoint format, which makes it easier to add Groq to existing workflows.
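Both services accept OpenAI-style chat-completion requests, so switching between them mostly means changing the base URL, API key, and model name. The sketch below builds, but does not send, such a request with Python's standard library. The base URLs and model IDs are assumptions based on each provider's documentation at the time of writing and may have changed. `PROVIDERS` and `build_chat_request` are hypothetical names.

```python
import json
import urllib.request

# Assumed OpenAI-compatible base URLs and model IDs; check each provider's
# current documentation, since model names change over time.
PROVIDERS = {
    "deepseek": ("https://api.deepseek.com", "deepseek-reasoner"),
    "groq": ("https://api.groq.com/openai/v1", "deepseek-r1-distill-llama-70b"),
}


def build_chat_request(provider: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    base_url, model = PROVIDERS[provider]
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

To send the request, pass it to `urllib.request.urlopen` and parse the JSON body. The answer is in `choices[0].message.content`. DeepSeek's `deepseek-reasoner` model also returns its chain of thought in a separate `reasoning_content` field.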

Limitations and Future Prospects

Both DeepSeek and Groq have limitations. DeepSeek is a relatively new player and may not yet have the community support that more established companies enjoy. Groq, despite its speed, offers a limited selection of models, and its pricing for the Groq Deepseek API is not always clear.

However, both companies are actively developing their technologies and expanding their offerings. DeepSeek is likely to keep improving performance, usability, and accessibility. Groq's focus on speed and efficiency makes it a compelling option for users who need low-latency inference.

Conclusion: Choosing Between DeepSeek and Groq Deepseek API

The choice between DeepSeek's official API and the Groq Deepseek API depends on your specific requirements. If pricing transparency and direct control over the model are priorities, DeepSeek's API may be the better fit. If low-latency inference matters most, the Groq Deepseek API may be the preferred choice. Both accept OpenAI-style requests, so either can slot into an existing OpenAI-compatible workflow.

Both platforms provide access to DeepSeek's models, so the right choice depends on your needs, priorities, and budget. Weigh pricing structures, performance benchmarks, and ease of integration before deciding. The AI landscape changes quickly, so keeping up with new developments is key to choosing the best platform for your needs.